Yuta Nakashima

Associate Professor, Osaka University

Yuta Nakashima is an Associate Professor at the Institute for Datability Science, Osaka University. He is also affiliated with ISLab, Graduate School of Information Science and Technology, Osaka University.

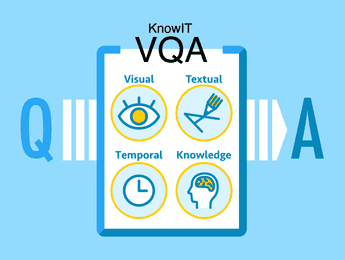

His research interests include:

- Computer Vision

- Pattern Recognition

- Natural Language Processing

- Vision and Language